Artificial Intelligence & Machine Learning

Inspired by neurons in brains… neural networks …machine that thinks like a human:

- …interpret data …learn from data …adapt & achieve specified goals

- Term origin: Dartmouth Conference on Artificial Intelligence (1956, John McCarthy, Marvin Minsky)

- “AI winter before ~2010” …until machine learning became viable

- …“enough” computing power provided by compute clusters

- …internet & social media (big data) provides input data (with labels)

Machine Learning

…part of AI (Artificial Intelligence)

Development of a learning system (…addresses a specific problem)

- Learning problem characterized by observation…

- …input- & output-data with unknown (coherent) relationship

- Goal: Find a generalized mapping between input and output

Statistical learning… learning of a mapping function

- Given input data $X$ & output data $y$…

- …find a function that approximates their relationship

- …form of the function unknown …hence the need for a learning system
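
One common textbook way to write this down, as a sketch: the symbols $f$, $\hat{f}$, $\varepsilon$, $L$, $\mathcal{H}$ and $n$ are assumptions added here, not notation from these notes.

$$
y = f(X) + \varepsilon, \qquad \hat{f} = \arg\min_{h \in \mathcal{H}} \frac{1}{n} \sum_{i=1}^{n} L\big(y_i, h(x_i)\big)
$$

Here $f$ is the unknown true mapping, $\varepsilon$ is noise in the observations, $\mathcal{H}$ is the family of functions the learning system can represent, and $L$ is a loss that penalizes wrong predictions on the $n$ observed pairs $(x_i, y_i)$.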

ML can be thought of as the problem of function approximation…

- …learned mapping function will be imperfect

- …difficult to know how far the approximated function is from the true (best) mapping

- …search for a “good enough” mapping function

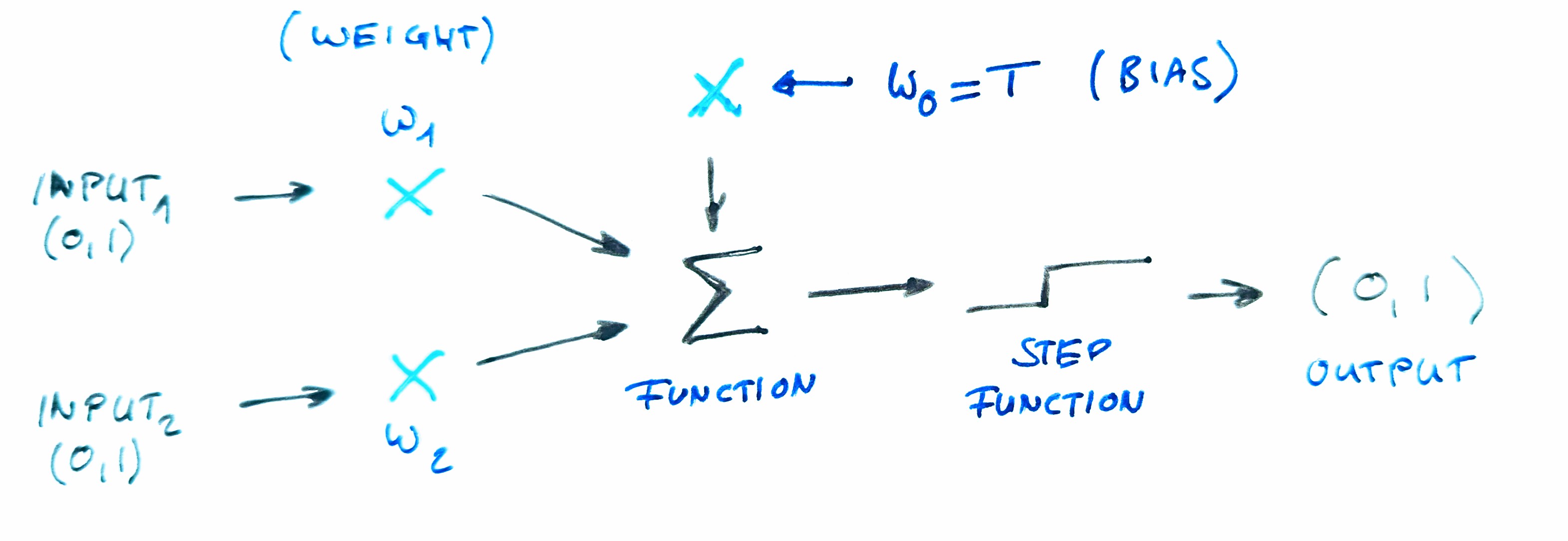
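
Since the true mapping is unknown, a usual stand-in for "how good is the approximation" is the error on data the model has not seen. A minimal sketch, assuming a made-up true mapping `true_mapping` and two hypothetical candidates `candidate_a` and `candidate_b` (none of these come from the notes):

```python
import random

def mean_squared_error(model, samples):
    """Average squared gap between model predictions and observed outputs."""
    return sum((model(x) - y) ** 2 for x, y in samples) / len(samples)

# Hypothetical "true" mapping; in a real problem it is unknown and only
# noisy observations of it are available.
def true_mapping(x):
    return 2.0 * x + 1.0

rng = random.Random(0)  # fixed seed so the run is repeatable
held_out = [(x, true_mapping(x) + rng.gauss(0.0, 0.1))
            for x in [0.5 * i for i in range(20)]]

# Two hypothetical candidate approximations of the mapping.
def candidate_a(x):
    return 1.9 * x + 1.2

def candidate_b(x):
    return 0.5 * x + 3.0

error_a = mean_squared_error(candidate_a, held_out)
error_b = mean_squared_error(candidate_b, held_out)

print(f"candidate_a error: {error_a:.3f}")
print(f"candidate_b error: {error_b:.3f}")
```

The candidate with the lower held-out error is the "good enough" choice here, even though neither matches the true mapping exactly.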

Example: Perceptron

Frank Rosenblatt (1958) builds the perceptron …single-layer artificial neural network …trained with a supervisor

- …serves as a model for a single neuron (binary classification)

- …determines if an input vector of numbers belongs to one of two classes

- Original task was to distinguish between a triangle and a circle

Components of the perceptron:

- Input …multiple features …represent characteristics or attributes

- Weights …each input associated with a weight

- …determines the significance of its influence

- …weights adjusted during training (to learn optimal value)

- Summation function …weighted sum of the inputs

- Activation function …Heaviside step function …compare sum to threshold

- Bias …adjustments independent of the input …additional parameter learned during training

- Weight update rule …perceptron learns by adjusting its weights and bias based on a learning algorithm
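
The components above can be sketched in a few lines. This is a minimal illustration, assuming the classic perceptron update rule, a learning rate of 1.0, and logical AND as a toy two-class problem; the function names and the data are chosen here for illustration and are not from the notes. The bias plays the role of the (negative) threshold.

```python
def heaviside(value):
    """Step activation: fire (1) when the weighted sum is positive."""
    return 1 if value > 0 else 0

def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus bias, passed through the step."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return heaviside(total)

def train(samples, learning_rate=1.0, epochs=20):
    """Perceptron rule: shift weights and bias by (target - prediction)."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Logical AND as a toy two-class problem (linearly separable).
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(and_samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in and_samples]
print(predictions)
```

The supervisor is the `target` value: weights only change when the prediction disagrees with it.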

Linear Regression

Used for a large number of machine learning models

- Linear means …linear model which is $y = wX + b$

- …relationship between the (known) input data $X$ aka feature

- …and the target variable $y$ aka response

- …$b$ is the bias and $w$ is the weight of the feature

- Regression means …model predicts continuous (quantitative) values

Goal: Find (infer) the best-fitting relationship between feature and target

More sophisticated models rely on multiple features…

- …each feature having a separate weight

- …aka multiple linear regression
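
For a single feature, the weight and bias can be computed directly. A minimal sketch, assuming ordinary least squares as the fitting criterion (the notes do not name one) and made-up data points; `fit_line` and `predict` are illustrative names:

```python
def fit_line(xs, ys):
    """Closed-form least-squares fit of y = w * x + b for one feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    w = covariance / variance
    b = mean_y - w * mean_x
    return w, b

def predict(x, w, b):
    """Continuous prediction from the fitted line."""
    return w * x + b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # made-up data lying on y = 2x + 1
w, b = fit_line(xs, ys)
print(w, b)
print(predict(5.0, w, b))
```

With multiple features the same idea applies, with one weight per feature instead of a single `w`.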

Supervised Learning

…supervisor corrects mistakes …requires training examples

- Reinforcement Learning

- …agent learns based on rewards & penalties (aka feedback)

- …based on interactions with environment …autonomous decision-making

- Used in… robotics, optimization problems, game play

- Supervised Learning

- …predict outcomes based on known input & output data

- …maps input to correct output based on provided labels

- Used in…

- …classification (e.g. SPAM detection, medical diagnosis)

- …regression (e.g. price predictions)

- …recommendation systems (e.g. shopping, media streaming)

- Unsupervised Learning

- …discover patterns, structure & relationships in data

- …operates on unlabelled datasets …understand data without guidance

- Used in… clustering, anomaly detection, dimensionality reduction

- Semi-supervised Learning

- …bridges labeled & unlabeled data
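
To make the "no labels" case concrete, here is a small clustering sketch. It assumes two-cluster k-means on one-dimensional made-up values; k-means is picked here as one common clustering method and is not named in the notes.

```python
def kmeans_1d(values, iterations=10):
    """Two-cluster k-means on plain numbers; no labels are used."""
    centroids = [min(values), max(values)]  # simple deterministic start
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value joins its nearest centroid.
        labels = [0 if abs(v - centroids[0]) <= abs(v - centroids[1]) else 1
                  for v in values]
        # Update step: move each centroid to the mean of its members.
        for k in (0, 1):
            members = [v for v, label in zip(values, labels) if label == k]
            if members:
                centroids[k] = sum(members) / len(members)
    return labels, centroids

values = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]  # made-up measurements
labels, centroids = kmeans_1d(values)
print(labels)
print(centroids)
```

The algorithm only sees the values; the grouping emerges from the structure of the data, in contrast to supervised learning where each sample arrives with a label.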

Deep Learning

…based on (artificial) neural networks

- Employ methods of supervised, semi-supervised or unsupervised learning

- …complex data at large-scale …simulate (automate) human learning process

- …applies convolutional neural networks and transformers

- Used for…

- …computer vision …image classification …image analysis

- …natural language processing …translation

- …speech recognition …voice assistants

“deep” …number of layers through which the data is transformed

- …progressively extract higher-level features (from the input)

- …each layer transforms input in a more abstract way

- Example: image pixels …identify edges …arrangements …nose & eyes …recognize face
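
The idea of data being transformed layer by layer can be sketched as a forward pass through stacked layers. This is a toy illustration, assuming fully connected layers with a ReLU non-linearity and hand-picked weights; real networks learn their weights and are far larger.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Non-linearity applied between layers."""
    return [max(0.0, v) for v in values]

def forward(inputs, layers):
    """Pass the input through each layer in turn."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:  # keep the final output linear
            activations = relu(activations)
    return activations

# Hypothetical, hand-picked parameters for a two-layer network.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # layer 1: 2 inputs -> 2 units
    ([[1.0, 1.0]], [0.5]),                    # layer 2: 2 units -> 1 output
]
output = forward([1.0, 2.0], layers)
print(output)
```

Each entry in `layers` consumes the output of the previous one, which is what "deep" refers to: more entries mean more successive transformations.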

LLM

Large Language Model …belongs to the field of natural language processing …process & understand human language (text)

What can LLMs do?

- …text generation …coherent & contextually relevant sentences

- …seamless multilingual translation (bridge language barriers)

- …sentiment analysis …evaluate sentiment in social media

- …summary of extensive documents …extract key information

- …image generation from a text description

- …conversation (chat) with human-like behaviour

- …automate customer service interactions

- …code generation …assistance in software development

What technologies do LLMs use?

- …deep learning …neural networks

- …multimodal …generate text, images, and code

- …self-supervised learning from language data

- Architecture influenced by…

- …model size & parameter count (billions)

- …input representation …decoding …output generation

- …computational efficiency

- …self-attention mechanism …training objectives

Consist of multiple layers…

- Feed-forward layers …process input data

- Embedding layers …represent words (tokens) as dense vectors

- Attention layers

- …weight importance of tokens in a sequence

- …capture entity relationships in text
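
How an embedding layer and an attention layer fit together can be sketched as follows. This is a simplified illustration: the embedding table is hypothetical and hand-picked, and the attention uses the token vectors directly as queries, keys and values, whereas real models apply learned projections and several attention heads.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention with queries = keys = values."""
    scale = math.sqrt(len(vectors[0]))
    outputs, all_weights = [], []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in vectors]
        weights = softmax(scores)
        # Each output is a weighted mix of every token's vector.
        mixed = [sum(w * vec[d] for w, vec in zip(weights, vectors))
                 for d in range(len(query))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embedding table: token -> dense vector.
embedding_table = {
    "the": [0.1, 0.0],
    "cat": [1.0, 0.2],
    "sat": [0.3, 1.0],
}
tokens = ["the", "cat", "sat"]
vectors = [embedding_table[t] for t in tokens]  # embedding layer lookup
outputs, weights = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence; the rows sum to 1.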

Transformers

…backbone of LLMs …GPTs (Generative Pre-trained Transformers)

- …pre-trained on large, general-purpose datasets

- …fine-tuned for specific tasks after training

- Advantage …parallelized reading of input (training) data

- Therefore …computationally efficient for large-scale language tasks

How does GPT process words and sentences?

- Word embeddings …represent words as numerical vectors

- …segment words (text) into discrete units

- …the word embedding captures the semantic association of words

- …encode meaning …relationships between words

- Example: “apple” is close to “fruit” but far from “car”

- Word embeddings represented by …numerical vectors

- …aka numerical embeddings (one per token) …used as input to an LLM

- Segmenting text into tokens is called tokenisation

- Transformer layers …process sequences of word embeddings (tokens)

- …multiple layers of transformer blocks …output a new sequence of vectors

- …capture relationship with other words …context, dependencies, semantic nuances

- Positional encoding …process order of tokens

- Attention mechanism …multi-head attention

- …weight importance of each word …captures contextual information

- …tokens contextualized in context window (long-range dependencies)

- …amplify important tokens & diminish less relevant tokens

- Text generation …word by word related to an input prompt

- …words chosen based on probabilities learned during training

- …generates coherent sentences based on learned patterns
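
Two of the steps above can be illustrated with toy numbers: closeness of word embeddings, and choosing the next word from probabilities. This is a sketch under stated assumptions: the 3-dimensional embeddings, the candidate vocabulary and the scores (`logits`) are all hand-picked for illustration, and cosine similarity is used here as one common closeness measure.

```python
import math

def cosine_similarity(a, b):
    """Near 1 for vectors pointing the same way, lower otherwise."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical embeddings, hand-picked so related words point the same way.
embeddings = {
    "apple": [0.9, 0.8, 0.1],
    "fruit": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}
apple_fruit = cosine_similarity(embeddings["apple"], embeddings["fruit"])
apple_car = cosine_similarity(embeddings["apple"], embeddings["car"])
print(f"apple~fruit: {apple_fruit:.2f}, apple~car: {apple_car:.2f}")

# Hypothetical scores a model might assign to candidate next tokens.
vocabulary = ["fruit", "car", "tree"]
logits = [2.0, 0.1, 1.0]
probabilities = softmax(logits)
next_token = vocabulary[probabilities.index(max(probabilities))]  # greedy pick
print(next_token)
```

Picking the most probable token (greedy decoding) is only one option; sampling from `probabilities` instead gives more varied output.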

GAN

Generative Adversarial Networks …for images

Todo…

RNN

Recurrent Neural Networks …for music

Datasets

LAION (Large-scale Artificial Intelligence Open Network) [1]…

- …non-profit organization …based in Hamburg, Germany

- …datasets = indexes to the Internet …image URL + text linked to image

- …recommends img2dataset [2] to download image subsets

Hugging Face Hub [3] hosts datasets, mainly text, images, and audio

Footnotes

1. LAION, https://laion.ai
2. img2dataset, GitHub, https://github.com/rom1504/img2dataset
3. Hugging Face Hub, https://huggingface.co